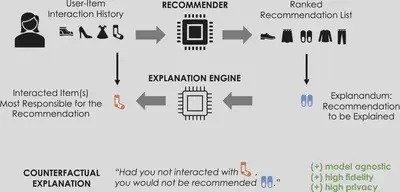

We develop a post-hoc, model-agnostic explanation mechanism for recommender systems. It returns counterfactual explanations, defined as minimal changes to the user’s interaction history that would cause the system to no longer make the recommendation being explained. Because counterfactuals achieve the desired output on the recommender itself, rather than on a proxy model, our explanation mechanism has the same fidelity as model-specific post-hoc explanations. Moreover, it is completely private, since extracting counterfactuals requires no information beyond the user’s own interaction history. Finally, owing to their simplicity, counterfactuals are scrutable, as they present specific interactions from the user’s history, and are potentially actionable.
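
To make the definition concrete, the following is a minimal sketch of one way such a counterfactual could be extracted. It is not the search procedure developed in this work. It assumes that a "change" means removing interactions, that the history contains no duplicate items, and that the recommender can be queried as a black box. The names `minimal_counterfactual`, `toy_recommend`, and `AFFINITY` are hypothetical and introduced only for illustration. The exhaustive search is exponential in the history length, so it is practical only for short histories.

```python
from collections import Counter
from itertools import combinations
from typing import Callable, FrozenSet, Optional, Sequence

Item = str
# A black-box recommender: maps an interaction history to its top item.
Recommender = Callable[[Sequence[Item]], Optional[Item]]


def minimal_counterfactual(
    recommend: Recommender,
    history: Sequence[Item],
    target: Item,
) -> Optional[FrozenSet[Item]]:
    """Return a smallest set of interactions whose removal makes the
    recommender stop recommending `target`, or None if no such set exists.

    Only the recommender and the user's own history are consulted.
    """
    # Try subsets in order of increasing size, so the first hit is minimal.
    for size in range(1, len(history) + 1):
        for removed in combinations(history, size):
            remaining = [item for item in history if item not in removed]
            if recommend(remaining) != target:
                return frozenset(removed)
    return None


# Hypothetical toy recommender: each past interaction adds weight to
# related items, and the highest-scoring unseen item is recommended.
AFFINITY = {
    "Alien": {"Blade Runner": 2},
    "The Matrix": {"Blade Runner": 2},
    "Toy Story": {"Finding Nemo": 1},
}


def toy_recommend(history: Sequence[Item]) -> Optional[Item]:
    scores: Counter = Counter()
    for item in history:
        for candidate, weight in AFFINITY.get(item, {}).items():
            if candidate not in history:
                scores[candidate] += weight
    if not scores:
        return None
    # Highest score first; ties broken alphabetically for determinism.
    return min(scores, key=lambda c: (-scores[c], c))


history = ["Alien", "The Matrix", "Toy Story"]
target = toy_recommend(history)
explanation = minimal_counterfactual(toy_recommend, history, target)
print(target, sorted(explanation))
```

In this toy setting, removing either science-fiction film alone leaves the recommendation unchanged, so the smallest counterfactual contains both. The resulting explanation reads as "you were recommended this item because you interacted with these two items", which is the scrutable form described above.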